Introduction

Testing is a core part of the app development process. By running tests against your application, you can verify its correctness, functional behavior, and usability before you release it.

You can manually test your app by navigating through it. However, manual testing scales poorly. Automated testing, by contrast, is done programmatically: it is faster, more repeatable, and generally gives you more actionable feedback about your app during development.

Types of tests

Tests can be classified by subject and by scope.

Subject & Scope

Subject-based tests answer specific questions about the app:

- Functional testing: test if the app does what it’s supposed to

- Performance testing: test if the app works quickly and efficiently

- Accessibility testing: test if the app works well with accessibility services (a.k.a. a11y)

- Compatibility testing: test if the app works well on every device and API level

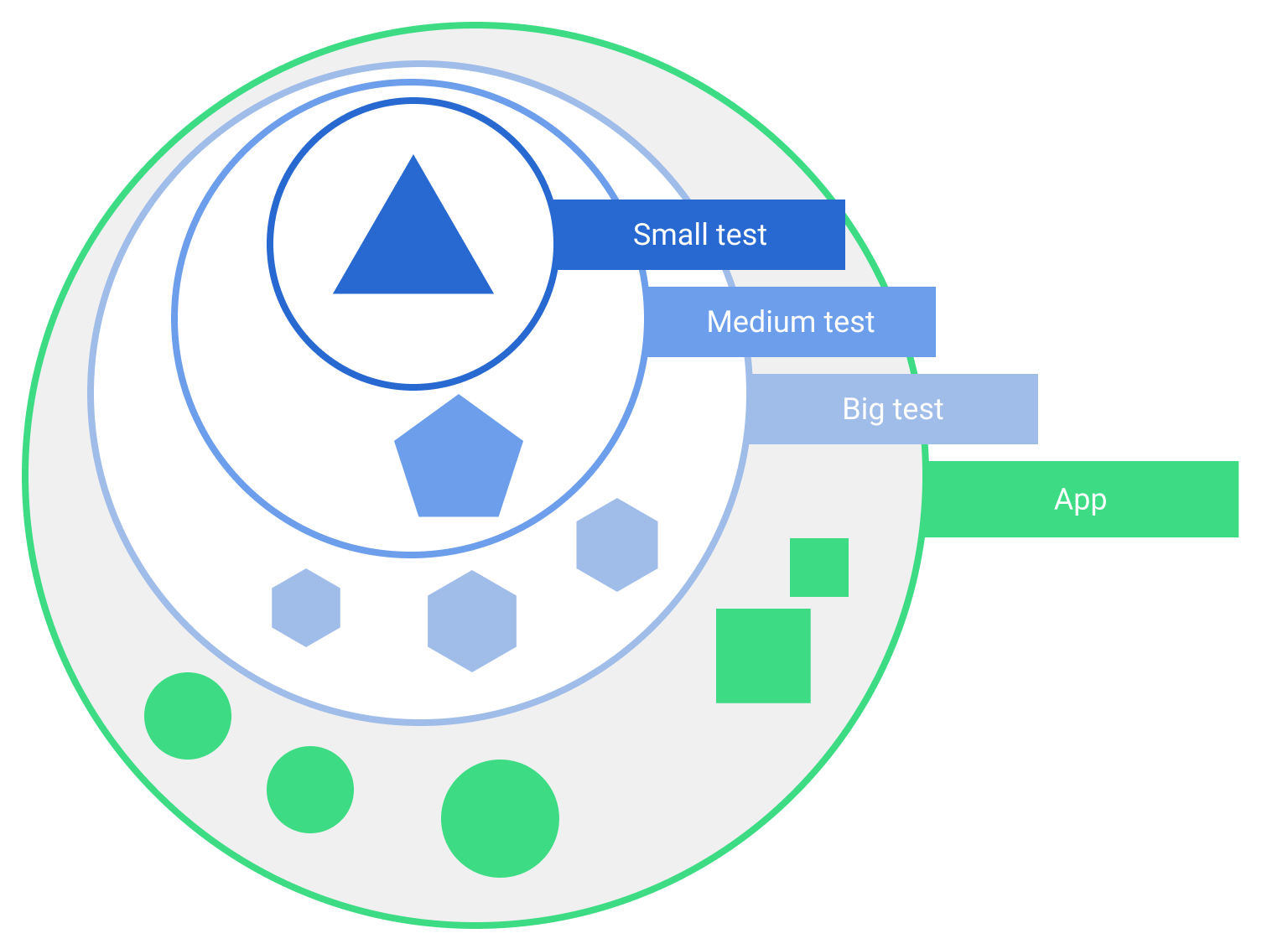

Tests also differ by size, or degree of isolation:

- Unit tests (small tests): verify a very small portion of the app, such as a method or class

- Integration tests (medium tests): check the integration between two or more units

- End-to-end tests (big tests): verify larger parts of the app at the same time, such as a whole screen or user flow

Figure 1: Test scopes in a typical application.

Instrumented vs. local

The most important distinction for tests is where they run.

Instrumented tests

Instrumented tests run on an Android device, either physical or emulated. The app is built and installed alongside a test app that injects commands and reads the state. Instrumented tests are usually UI tests that launch the app and then interact with it.

Local tests

Local tests, also called host-side tests, execute on your development machine or on a server. They’re usually small and fast, isolating the subject under test from the rest of the app.

The two classifications overlap: not all unit tests are local, and not all end-to-end tests run on a device. For instance:

- Big local test: use an Android simulator that runs locally, such as Robolectric.

- Small instrumented test: verify that your code works well with a framework feature, such as a SQLite database.

Testing strategy

The hard part is finding an appropriate balance between the fidelity, speed, and reliability of your tests.

Testable architecture

A testable architecture also improves the readability, maintainability, scalability, and reusability of the code.

An architecture that is not testable produces bigger, slower, flakier tests. In addition, classes that can’t be unit-tested might have to be covered by bigger integration tests or UI tests. Moreover, a non-testable architecture gives fewer opportunities for testing different scenarios.

Keep in mind that bigger tests are slower, so testing all possible states of an app might be unrealistic.

Decoupling

Decoupling is the practice of extracting part of a function, class, or module from the rest so that it can be tested in isolation. The extracted part is easier to test, and its tests are more effective.

Common decoupling techniques include:

- Split an app into layers such as Presentation, Domain, and Data. You can also split an app into modules, one per feature.

- Avoid adding logic to entities that have large dependencies, such as activities and fragments. Use these classes as entry points to the framework and move UI and business logic elsewhere, such as to a Composable, ViewModel, or domain layer.

- Avoid direct framework dependencies in classes containing business logic. For example, don’t use Android Contexts in ViewModels.

- Make dependencies easy to replace. For instance, use interfaces instead of concrete implementations. Use dependency injection (DI), even if you don’t use a DI framework.
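The last two techniques in the list above can be sketched in plain Kotlin. This is only an illustration under stated assumptions: `Task`, `TaskRepository`, `TaskSummaryFormatter`, `FakeTaskRepository`, and `summaryOf` are hypothetical names that do not come from any real project. The business logic depends on an interface, receives it through its constructor (manual DI), and imports nothing from Android, so a local test can swap in an in-memory fake.

```kotlin
// All names here are hypothetical and exist only for this sketch.
data class Task(val title: String, val description: String, val isCompleted: Boolean)

// The abstraction that business logic depends on, instead of a concrete
// database or network class.
interface TaskRepository {
    fun loadTasks(): List<Task>
}

// Business logic with no Android imports. The dependency arrives through
// the constructor, so a test can pass in any implementation.
class TaskSummaryFormatter(private val repository: TaskRepository) {
    fun summary(): String {
        val tasks = repository.loadTasks()
        if (tasks.isEmpty()) return "No tasks"
        val completed = tasks.count { it.isCompleted }
        return "$completed of ${tasks.size} completed"
    }
}

// Test double: an in-memory fake that stands in for the real repository.
class FakeTaskRepository(private val tasks: List<Task>) : TaskRepository {
    override fun loadTasks(): List<Task> = tasks
}

// Manual dependency injection: the fake is wired in by hand,
// without a DI framework.
fun summaryOf(tasks: List<Task>): String =
    TaskSummaryFormatter(FakeTaskRepository(tasks)).summary()
```

In production code the same `TaskSummaryFormatter` would receive a repository backed by a database or a network client. The class under test would not change, only the object passed to its constructor.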

What to test

Organization of test directories

A typical project in Android Studio contains two directories that hold tests, depending on their execution environment:

- The androidTest directory contains instrumented tests, such as:

  - integration tests

  - end-to-end tests

  - other tests where the JVM alone cannot validate your app’s functionality

- The test directory contains local tests, such as unit tests. These can run on a local JVM.

Unit test

Following best practice, write unit tests for:

- ViewModels or presenters

- the data layer: most of the data layer should be platform-independent

- other platform-independent layers such as the Domain layer, as with use cases and interactors

- utility classes such as string manipulation and math

For the data layer (the second item above), see test doubles.

Edge Cases

Unit tests should also focus on edge cases: uncommon scenarios that manual testing and larger tests are unlikely to catch, for example:

- math operations using negative numbers, zero, and boundary conditions

- network connection errors

- corrupted data, such as malformed JSON

- simulating full storage when saving to a file

- object recreated in the middle of a process (such as an activity when the device is rotated)
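As an illustration of the first item in the list above, here is a small sketch of boundary-condition checks written in plain Kotlin. `completionPercent`, `throwsIllegalArgument`, and `edgeCaseChecks` are hypothetical names made up for this example. In a real project each `check` would more likely be a JUnit assertion inside its own `@Test` method.

```kotlin
// Hypothetical function under test: share of completed items as an Int in 0..100.
fun completionPercent(completed: Int, total: Int): Int {
    require(completed >= 0 && total >= 0) { "counts must not be negative" }
    require(completed <= total) { "completed must not exceed total" }
    if (total == 0) return 0 // an empty list must not cause a division by zero
    return completed * 100 / total
}

// Helper: returns true when the block throws IllegalArgumentException.
fun throwsIllegalArgument(block: () -> Unit): Boolean =
    try {
        block()
        false
    } catch (e: IllegalArgumentException) {
        true
    }

// Each line probes one edge of the input space rather than the "happy path".
fun edgeCaseChecks() {
    check(completionPercent(0, 0) == 0)    // zero total
    check(completionPercent(0, 5) == 0)    // lower boundary
    check(completionPercent(5, 5) == 100)  // upper boundary
    check(completionPercent(1, 3) == 33)   // integer division rounds down
    check(throwsIllegalArgument { completionPercent(-1, 5) }) // negative input
    check(throwsIllegalArgument { completionPercent(6, 5) })  // out-of-range input
}
```

Checks like these are cheap to run locally and cover inputs that a tester clicking through the app would rarely produce.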

Tests to avoid

As noted above, you should not test everything. Avoid low-value tests, such as tests that verify the correct operation of the framework or of a library rather than your own code.

Moreover, framework entry points such as activities, fragments, or services should not contain business logic, so unit testing them shouldn’t be a priority. Unit tests for activities have little value, because they would cover mostly framework code, and they require a more involved setup. Instrumented tests such as UI tests can cover these classes.

UI tests

There are several types of UI tests you should employ:

Screen UI tests

Check critical user interactions in a single screen. Perform actions such as clicking on buttons, typing in forms, and checking visible states. One test class per screen is a good starting point.

Navigation tests

Also known as user flow tests, these cover the most common paths. They simulate a user moving through a navigation flow. They are simple tests, useful for catching run-time crashes during initialization.

Note: Test coverage is a metric that indicates how much of your code is visited (covered) by your tests. It can reveal untested portions of the codebase, but it should not be the only metric used to judge a testing strategy.

Writing tests: notes

An assertion is the core of your test. It’s a code statement that checks that your code or app behaved as expected.

AndroidX Test is a collection of libraries for testing. It includes classes and methods that give you versions of components, such as Applications and Activities, that are meant for tests. For example, ApplicationProvider.getApplicationContext(), used in the tests later in this post, is an AndroidX Test function for getting an application context. One of the benefits of the AndroidX Test APIs is that they are built to work both for local tests and instrumented tests. This is nice because:

- You can run the same test as a local test or an instrumented test.

- You don’t need to learn different testing APIs for local vs. instrumented tests.

The simulated Android environment that AndroidX Test uses for local tests is provided by Robolectric. Robolectric is a library that creates a simulated Android environment for tests and runs faster than booting up an emulator or running on a device. For details, see the test-runner docs.
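To run AndroidX Test code as a local test, the module’s build file needs the relevant test dependencies. The fragment below is a sketch in Gradle Kotlin DSL, meant to be merged into an existing module-level build.gradle.kts. The version numbers are assumptions and should be checked against the current releases of each library.

```kotlin
// Fragment of a module-level build.gradle.kts.
// Version numbers are assumptions; check for current releases.
android {
    testOptions {
        unitTests {
            // Lets Robolectric-backed local tests read Android resources.
            isIncludeAndroidResources = true
        }
    }
}

dependencies {
    // JUnit 4 and Hamcrest matchers
    testImplementation("junit:junit:4.13.2")
    testImplementation("org.hamcrest:hamcrest:2.2")
    // AndroidX Test: ApplicationProvider and the AndroidJUnit4 runner
    testImplementation("androidx.test:core-ktx:1.5.0")
    testImplementation("androidx.test.ext:junit-ktx:1.1.5")
    // Simulated Android environment for local tests
    testImplementation("org.robolectric:robolectric:4.11.1")
    // JUnit rules for Architecture Components, such as LiveData
    testImplementation("androidx.arch.core:core-testing:2.2.0")
}
```

Because these are `testImplementation` dependencies, they apply only to the local test source set. Instrumented tests would declare their own with `androidTestImplementation`.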

To test LiveData it’s recommended to:

- Use InstantTaskExecutorRule

- Ensure LiveData observation

InstantTaskExecutorRule is a JUnit Rule. When you use it with the @get:Rule annotation, it causes some code in the InstantTaskExecutorRule class to be run before and after the tests.

This rule runs all Architecture Components-related background jobs in the same thread, so that the test results happen synchronously and in a repeatable order.

When you write tests that include testing LiveData, use this rule!

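```kotlin
import androidx.arch.core.executor.testing.InstantTaskExecutorRule
import androidx.test.core.app.ApplicationProvider
import androidx.test.ext.junit.runners.AndroidJUnit4
import org.hamcrest.CoreMatchers.`is`
import org.hamcrest.CoreMatchers.not
import org.hamcrest.CoreMatchers.nullValue
import org.hamcrest.MatcherAssert.assertThat
import org.junit.Rule
import org.junit.Test
import org.junit.runner.RunWith
// Plus the app's own TasksViewModel, TasksFilterType and getOrAwaitValue.

@RunWith(AndroidJUnit4::class)
class TasksViewModelTest {

    // Runs Architecture Components background jobs synchronously on the test thread.
    @get:Rule
    val instantExecutorRule = InstantTaskExecutorRule()

    @Test
    fun addNewTask_setNewTaskEvent() {
        val tasksViewModel = TasksViewModel(ApplicationProvider.getApplicationContext())

        tasksViewModel.addNewTask()

        // Docs: https://medium.com/androiddevelopers/livedata-with-snackbar-navigation-and-other-events-the-singleliveevent-case-ac2622673150
        // new task event is triggered
        val value = tasksViewModel.newTaskEvent.getOrAwaitValue()
        assertThat(value.getContentIfNotHandled(), not(nullValue()))
    }

    /**
     * Sets the filtering mode to `ALL_TASKS` and asserts that the `tasksAddViewVisible` LiveData is `true`.
     */
    @Test
    fun setFilterAllTasks_tasksAddViewVisible() {
        val tasksViewModel = TasksViewModel(ApplicationProvider.getApplicationContext())

        // when the filter type is ALL_TASKS
        tasksViewModel.setFiltering(TasksFilterType.ALL_TASKS)
        val value = tasksViewModel.tasksAddViewVisible.getOrAwaitValue()

        // then the "Add task" action is visible
        assertThat(value, `is`(true))
    }
}
```

When you have repeated setup code for multiple tests, you can use the @Before annotation to create a setup method and remove the repeated code.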

Since all of these tests are going to test the TasksViewModel, and need a view model, move this code to this @Before block.

```kotlin
import org.junit.Before

@RunWith(AndroidJUnit4::class)
class TasksViewModelTest {

    private lateinit var tasksViewModel: TasksViewModel

    /**
     * Steps:
     * 1. Create a `lateinit` instance variable used in multiple tests
     * 2. Create this method and annotate it with @Before
     * 3. Write the view model instantiation code here
     */
    @Before
    fun setUp() {
        tasksViewModel = TasksViewModel(ApplicationProvider.getApplicationContext())
    }
}
```

Utility test function:

```kotlin
import androidx.annotation.VisibleForTesting
import androidx.lifecycle.LiveData
import androidx.lifecycle.Observer
import java.util.concurrent.CountDownLatch
import java.util.concurrent.TimeUnit
import java.util.concurrent.TimeoutException

/**
 * Kotlin extension function that adds an observer, gets the LiveData value,
 * and then cleans up the observer. It is basically a short, reusable
 * version of the `observeForever` code.
 *
 * Docs: [Unit-testing LiveData and other common observability problems](https://medium.com/androiddevelopers/unit-testing-livedata-and-other-common-observability-problems-bb477262eb04)
 */
@VisibleForTesting(otherwise = VisibleForTesting.NONE)
fun <T> LiveData<T>.getOrAwaitValue(
    time: Long = 2,
    timeUnit: TimeUnit = TimeUnit.SECONDS,
    afterObserve: () -> Unit = {}
): T {
    var data: T? = null
    val latch = CountDownLatch(1)
    val observer = object : Observer<T> {
        // `value: T` compiles against both the Java and the Kotlin version of Observer.
        override fun onChanged(value: T) {
            data = value
            latch.countDown()
            this@getOrAwaitValue.removeObserver(this)
        }
    }
    this.observeForever(observer)

    try {
        afterObserve.invoke()

        // Don't wait indefinitely if the LiveData is not set.
        if (!latch.await(time, timeUnit)) {
            throw TimeoutException("LiveData value was never set.")
        }
    } finally {
        // Always clean up, including when the wait times out.
        this.removeObserver(observer)
    }

    @Suppress("UNCHECKED_CAST")
    return data as T
}
```

Other unit tests, which are not aware of the Android environment:
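For context, the tests in this section call `getActiveAndCompletedStats`, whose implementation is not shown in this post. The sketch below is one possible shape for it, inferred only from how the tests use it. The `Task` and `StatsResult` classes are assumptions and may differ from the real ones.

```kotlin
// Hypothetical model classes, shaped to match how the tests use them.
data class Task(
    val title: String,
    val description: String,
    val isCompleted: Boolean = false
)

data class StatsResult(
    val activeTasksPercent: Float,
    val completedTasksPercent: Float
)

// Pure Kotlin with no Android imports, so it can be covered by a local test.
fun getActiveAndCompletedStats(tasks: List<Task>?): StatsResult {
    // A null or empty list yields 0% / 0% and avoids a division by zero.
    if (tasks.isNullOrEmpty()) return StatsResult(0f, 0f)

    val total = tasks.size
    val active = tasks.count { !it.isCompleted }
    return StatsResult(
        activeTasksPercent = 100f * active / total,
        completedTasksPercent = 100f * (total - active) / total
    )
}
```

The local test class for this function follows.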

```kotlin
import org.hamcrest.CoreMatchers.`is`
import org.hamcrest.CoreMatchers.not
import org.hamcrest.MatcherAssert.assertThat
import org.junit.Test

/**
 * A local test: the tested function only does math and uses no Android-specific code.
 */
class StatisticsUtilsTest {

    /**
     * Checks that, with no completed tasks and one active task:
     * - the percentage of active tasks is 100%,
     * - the percentage of completed tasks is 0%.
     *
     * **Please Note**: the `@Test` annotation is mandatory.
     */
    @Test
    fun getActiveAndCompletedStats_noCompleted_returnsHundredZero() {
        // 1. create an active task
        val tasks = listOf(
            Task("Make a test run", "Describe the task", false)
        )

        // 2. call the function
        val result = getActiveAndCompletedStats(tasks)

        // 3. check the result
        // Plain JUnit equivalents:
        // assertEquals(0f, result.completedTasksPercent)
        // assertEquals(100f, result.activeTasksPercent)
        // assertNotEquals(50f, result.activeTasksPercent)

        // --- more human readable ---
        // assert that there are no completed tasks
        assertThat(result.completedTasksPercent, `is`(0f))
        assertThat(result.completedTasksPercent, not(50f))
        // assert that all tasks are active
        assertThat(result.activeTasksPercent, `is`(100f))
        assertThat(result.activeTasksPercent, not(50f))
    }

    @Test
    fun getActiveAndCompletedStats_noActive_returnsZeroHundred() {
        val tasks = listOf(
            Task("title", "desc", isCompleted = true)
        )

        // When the list of tasks is computed with a completed task
        val result = getActiveAndCompletedStats(tasks)

        // Then the percentages are 0 and 100
        assertThat(result.activeTasksPercent, `is`(0f))
        assertThat(result.completedTasksPercent, `is`(100f))
    }

    @Test
    fun getActiveAndCompletedStats_both_returnsFortySixty() {
        // Given 3 completed tasks and 2 active tasks
        val tasks = listOf(
            Task("title", "desc", isCompleted = true),
            Task("title", "desc", isCompleted = true),
            Task("title", "desc", isCompleted = true),
            Task("title", "desc", isCompleted = false),
            Task("title", "desc", isCompleted = false)
        )

        // When the list of tasks is computed
        val result = getActiveAndCompletedStats(tasks)

        // Then the result is 40-60
        assertThat(result.activeTasksPercent, `is`(40f))
        assertThat(result.completedTasksPercent, `is`(60f))
    }

    @Test
    fun getActiveAndCompletedStats_error_returnsZeros() {
        // When there's an error loading stats (the list is null)
        val result = getActiveAndCompletedStats(null)

        // Both active and completed tasks are 0
        assertThat(result.activeTasksPercent, `is`(0f))
        assertThat(result.completedTasksPercent, `is`(0f))
    }

    @Test
    fun getActiveAndCompletedStats_empty_returnsZeros() {
        // When there are no tasks
        val result = getActiveAndCompletedStats(emptyList())

        // Both active and completed tasks are 0
        assertThat(result.activeTasksPercent, `is`(0f))
        assertThat(result.completedTasksPercent, `is`(0f))
    }
}
```

Conclusion

No conclusion yet, this post is a work in progress!